What is churn prevention?

Churn prevention is the proactive process of identifying customers' frustrations and friction points and addressing them through targeted interventions, such as fixing product issues or offering support, before those customers leave your product or service.

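In B2B SaaS, churn prevention can happen at two levels: the account level (the business customer) and the user level (the individual people within that account).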

In this guide, we'll be focusing on user churn prevention, understanding why users disengage, and how to re-engage them before it impacts the broader relationship with the business account.

💡 Related guide: The Ultimate Guide to Reducing Customer Churn in SaaS

How to implement a churn prevention strategy

If you want to stop churn in its tracks, the key is to catch the warning signs early and engage with your users in the right way. Here's how to build a churn prevention strategy that works:

Identify churn windows using retention analysis

Retention analysis does more than track how many users stick around. It also reveals the point at which users begin to disengage. A sharp drop in the retention curve is usually a sign that users are disengaging and churn is spiking.

To run a retention analysis, set up key events based on your product journey, such as signed up or completed onboarding, and plot a retention chart. Then, track how many users return on Day 1, Day 2, Day 3, and so on.

What you're looking for here is the biggest drop in the retention curve. For example, if 70% of users return on Day 2 but only 30% return on Day 3, that sudden drop indicates your churn window. That's the point where most users disengage and stop coming back.
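To make the idea concrete, here is a minimal Python sketch of finding a churn window. It assumes you can export signup dates and session dates per user; the function names (`retention_curve`, `churn_window`) and the sample data are hypothetical and only mirror the 70% to 30% example above.

```python
from datetime import date


def retention_curve(signups, sessions, max_day):
    """Share of the cohort that was active exactly N days after signing up."""
    curve = {}
    for day in range(1, max_day + 1):
        returned = {
            user
            for user, session_date in sessions
            if user in signups and (session_date - signups[user]).days == day
        }
        curve[day] = len(returned) / len(signups)
    return curve


def churn_window(curve):
    """Day with the largest drop in retention versus the previous tracked day."""
    days = sorted(curve)
    drops = {later: curve[earlier] - curve[later] for earlier, later in zip(days, days[1:])}
    return max(drops, key=drops.get)


# Hypothetical data: 10 users who all signed up on the same day.
start = date(2024, 1, 1)
signups = {f"u{i}": start for i in range(10)}
sessions = (
    [(f"u{i}", date(2024, 1, 2)) for i in range(8)]    # 8 users return on Day 1
    + [(f"u{i}", date(2024, 1, 3)) for i in range(7)]  # 7 users return on Day 2
    + [(f"u{i}", date(2024, 1, 4)) for i in range(3)]  # 3 users return on Day 3
)

curve = retention_curve(signups, sessions, max_day=3)
window = churn_window(curve)
print(curve)
print("Churn window: Day", window)
```

In this made-up dataset, retention falls from 70% on Day 2 to 30% on Day 3, so Day 3 is flagged as the churn window. A product analytics tool will usually draw this chart for you; the sketch only shows what the calculation amounts to.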

💡 Related guide: User Retention: How to Increase & Measure it With Key Metrics

Create cohorts to isolate at-risk groups

After you've figured out when most users drop off, the next step is to create cohorts of the users who fall within that window. Cohorts are groups with shared characteristics or behaviors, and they help you isolate patterns within specific user segments.

There are several cohort types. Each cohort gives you a view from a different lens. Here are a few cohorts you could create:

Behavioral cohorts: These cohorts are based on specific user actions, or lack thereof, during their journey with your product.

Acquisition channel cohorts: These cohorts group users by how they first discovered your product, such as social media ads, email campaigns, organic search, or paid search.

Demographic cohorts: These segment users by demographic characteristics, such as industry, company size, or geographical location.

Once you've created these cohorts, review the retention data again, this time comparing different cohorts within your identified churn window. This helps you pinpoint which user groups are at the highest risk and prioritize your intervention efforts.

For example, your product team tells you that most users are expected to complete a short onboarding checklist during their first few sessions. So, you create two behavioral cohorts to analyze this: Group A, users who completed the checklist, and Group B, users who skipped it.

Now, you check retention for both groups on Day 3, which is your churn window. You notice that Group A retains at a far higher rate than Group B.

That's a big gap. It tells you that completing the checklist is strongly linked to retention, and users who skip it are much more likely to churn. So, Group B becomes your at-risk segment, and you now have a specific aspect to target and improve.
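A comparison like this can be sketched in a few lines of Python. The example assumes Group A is the users who completed the checklist and Group B is the users who skipped it; the record fields (`completed_checklist`, `active_days`) and the sample users are hypothetical, not data from a real product.

```python
def day_n_retention(users, day):
    """Share of users in the cohort who were active on the given day."""
    if not users:
        return 0.0
    return sum(1 for user in users if day in user["active_days"]) / len(users)


# Hypothetical user records; the field names are assumptions for illustration.
users = [
    {"id": 1, "completed_checklist": True, "active_days": {1, 2, 3}},
    {"id": 2, "completed_checklist": True, "active_days": {1, 3}},
    {"id": 3, "completed_checklist": True, "active_days": {1}},
    {"id": 4, "completed_checklist": False, "active_days": {1, 2}},
    {"id": 5, "completed_checklist": False, "active_days": {3}},
    {"id": 6, "completed_checklist": False, "active_days": set()},
    {"id": 7, "completed_checklist": False, "active_days": {1}},
]

group_a = [u for u in users if u["completed_checklist"]]      # completed the checklist
group_b = [u for u in users if not u["completed_checklist"]]  # skipped the checklist

retention_a = day_n_retention(group_a, day=3)
retention_b = day_n_retention(group_b, day=3)
gap = retention_a - retention_b

print(f"Group A Day 3 retention: {retention_a:.0%}")
print(f"Group B Day 3 retention: {retention_b:.0%}")
```

Keep in mind that a gap like this shows a link between the checklist and retention, not proof that one causes the other. That is why the later steps test interventions before rolling them out.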

Analyze behaviors within the at-risk cohort

Once you've identified your at-risk cohort, the next step is to dig into what's going wrong. To do this, run a funnel analysis to track key actions in your user journey.

For example: signed up → viewed dashboard → completed onboarding → created first project.
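As a rough illustration, the funnel above could be computed like this in Python. The event names and sample users are hypothetical, and for simplicity the sketch only checks that a user has performed every step up to a given point, not the order in which they did so.

```python
FUNNEL = ["signed_up", "viewed_dashboard", "completed_onboarding", "created_first_project"]


def funnel_counts(events_by_user, steps):
    """Count users who reached each step and all the steps before it."""
    counts = []
    for i in range(len(steps)):
        required = set(steps[: i + 1])
        counts.append(sum(1 for events in events_by_user.values() if required <= set(events)))
    return counts


def step_conversion(counts):
    """Conversion rate from each step to the next one."""
    return [nxt / prev if prev else 0.0 for prev, nxt in zip(counts, counts[1:])]


# Hypothetical events for five users in the at-risk cohort.
events_by_user = {
    "u1": ["signed_up", "viewed_dashboard", "completed_onboarding", "created_first_project"],
    "u2": ["signed_up", "viewed_dashboard", "completed_onboarding", "created_first_project"],
    "u3": ["signed_up", "viewed_dashboard"],
    "u4": ["signed_up", "viewed_dashboard"],
    "u5": ["signed_up"],
}

counts = funnel_counts(events_by_user, FUNNEL)
conversion = step_conversion(counts)

# The step with the lowest conversion is where most users drop out.
weakest = min(range(len(conversion)), key=conversion.__getitem__)
print(counts)
print("Biggest drop-off:", FUNNEL[weakest], "->", FUNNEL[weakest + 1])
```

In this sample, half of the users who view the dashboard never complete onboarding, so that transition is the one to investigate first.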

To go even further, consider using path analysis to visualize and track the sequence of actions that users take within your product. The questions you ask of these paths will depend on the kind of product you have.

This shows you not only their behavior before they drop off but also if they're taking any unexpected paths that might indicate confusion or frustration.
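If your analytics tool doesn't offer path analysis, a simple version can be approximated by counting transitions between consecutive events. The sketch below is one possible approach, not a standard method; the event names and paths are hypothetical.

```python
from collections import Counter


def transition_counts(paths):
    """Count how often one action is immediately followed by another."""
    counts = Counter()
    for path in paths:
        counts.update(zip(path, path[1:]))
    return counts


def exit_actions(paths):
    """Count the last action each user took before going quiet."""
    return Counter(path[-1] for path in paths if path)


# Hypothetical ordered event paths for three at-risk users.
paths = [
    ["signed_up", "viewed_dashboard", "opened_settings", "viewed_dashboard", "opened_settings"],
    ["signed_up", "viewed_dashboard", "opened_settings"],
    ["signed_up", "viewed_dashboard", "started_onboarding"],
]

transitions = transition_counts(paths)
exits = exit_actions(paths)

print(transitions.most_common(3))
print(exits.most_common(1))
```

Here, users bounce between the dashboard and the settings page and often leave from settings, an unexpected path that may hint at confusion about where to go next.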

Form hypotheses for interventions

Now that you've identified where your at-risk users are struggling, the next step is to develop interventions that can address these issues. A good practice is to formulate a hypothesis about what action could help users move past the friction points and engage more with your product.

To form a hypothesis, use this simple template: "If we [make this change], then more users will [complete this key action], which will improve retention and prevent churn."

For example, if your funnel analysis shows that many users never complete onboarding, you might form hypotheses like:

If we add a progress bar to the onboarding flow, more users will complete it.

If we send a reminder email to users who haven't finished onboarding, more of them will return and complete it.

If we simplify the onboarding checklist, more users will finish it and stick around.

To decide which ideas to prioritize and test, evaluate each one using a simple framework like ICE: Impact (how much it could reduce churn), Confidence (how likely it is to work), and Ease (how little effort it takes to build). Focus on ideas with the highest potential and the lowest complexity.
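One common way to apply ICE is to rate each idea from 1 to 10 on all three dimensions and multiply the ratings. The sketch below follows that convention and expresses the third rating as ease, where a higher number means less effort to build. The ratings themselves are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 1-10: how much it could reduce churn
    confidence: int  # 1-10: how likely it is to work
    ease: int        # 1-10: higher means less effort to build

    @property
    def ice_score(self):
        return self.impact * self.confidence * self.ease


# Hypothetical ratings for the three example hypotheses.
ideas = [
    Idea("Simplify the onboarding checklist", impact=8, confidence=6, ease=4),
    Idea("Add a progress bar to onboarding", impact=6, confidence=7, ease=8),
    Idea("Send a reminder email", impact=5, confidence=6, ease=9),
]

ranked = sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(idea.ice_score, idea.name)
```

With these ratings, the progress bar ranks first because it combines solid expected impact with low build effort. Some teams average the three ratings instead of multiplying them; either works as long as you score every idea the same way.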

Test interventions on users showing early signs of churn

Now that you have your hypotheses in place, the next step is to test them with real users. This allows you to see if your changes actually make a difference before rolling them out to everyone.

To start, identify the test group by using the same early-warning signals you found in your at-risk cohort.

Next, split those users into a control group and a variant group:

The control group experiences the product as is, with no changes.

The variant group receives the new experience with the intervention, whether that's a progress bar, a tooltip, a reminder email, or another tweak.
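If you're not using an experimentation tool, one simple way to split users is to hash each user ID so that the same user always lands in the same group. This is a minimal sketch under that assumption; the function name `assign_group` and the experiment label are hypothetical.

```python
import hashlib


def assign_group(user_id, experiment="onboarding_progress_bar"):
    """Deterministically assign a user to 'control' or 'variant' (roughly 50/50)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "variant" if int(digest, 16) % 2 else "control"


# Hypothetical at-risk users who showed the early-warning signals.
at_risk_users = [f"user-{i}" for i in range(1000)]
groups = {user: assign_group(user) for user in at_risk_users}

variant_size = sum(1 for group in groups.values() if group == "variant")
print("variant:", variant_size, "control:", len(groups) - variant_size)
```

Including the experiment name in the hash means a user who lands in the control group for one test isn't automatically in the control group for the next one.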

Decide on a test duration that's long enough to yield meaningful results, depending on your user volume.

When the experiment ends, compare the performance between the control and variant groups and try to answer these questions:

Did the variant group show higher completion of the target action (e.g., onboarding checklist) than the control group?

Did Day 3 (or Day 7) retention improve by a meaningful percentage?

Is the observed lift statistically significant?

This testing process ensures that you're making data-driven decisions and not just assumptions.
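To check whether a lift in retention is statistically significant, one widely used option is a two-proportion z-test. The sketch below uses only the Python standard library; the user counts are hypothetical, and it assumes both groups are large enough for the normal approximation to hold.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(retained_control, n_control, retained_variant, n_variant):
    """Return (lift, z, two-sided p-value) for variant vs. control retention."""
    p_control = retained_control / n_control
    p_variant = retained_variant / n_variant
    pooled = (retained_control + retained_variant) / (n_control + n_variant)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_variant))
    z = (p_variant - p_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_variant - p_control, z, p_value


# Hypothetical results: 1,000 users per group, measured on Day 3.
lift, z, p_value = two_proportion_z_test(
    retained_control=300, n_control=1000,
    retained_variant=360, n_variant=1000,
)

print(f"lift={lift:.1%}, z={z:.2f}, p={p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

A p-value below your chosen threshold (0.05 is a common choice) suggests the lift is unlikely to be random noise. With small groups, the same lift may not reach significance, which is one reason test duration depends on user volume.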

Deploy proven changes

Once your test has shown that an intervention works, it's time to roll it out to all users.

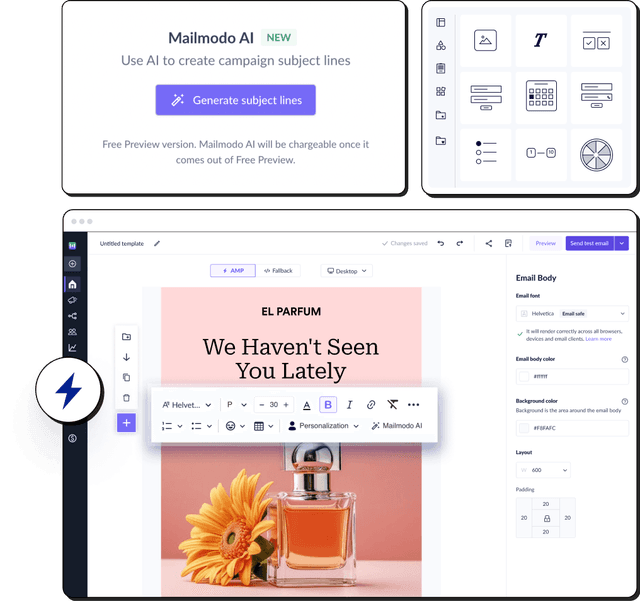

This could mean integrating a new feature like a progress bar, sending reminder emails, or adjusting any other element you tested and found effective.

You must also continuously monitor how the new changes are performing. Make sure you are tracking the right metrics. This will help you spot any unexpected issues or changes in user behavior once the intervention is rolled out at scale.

Finally, collect user feedback about the new changes. Even though your intervention was successful in the test, the real-world experience could reveal new friction points. Look for user comments, complaints, or behavior that suggest the change isn't working as smoothly as expected. This feedback is critical to refining and fine-tuning the final experience.

Challenges in implementing a churn prevention strategy

Executing a churn prevention strategy comes with its own set of challenges. Below are some of the most common pitfalls teams run into and how to avoid them:

Designing interventions that actually work

Designing effective interventions is harder than it sounds. A tooltip might go unnoticed, a banner might feel annoying, and emails might never get opened. Just knowing what behavior to drive doesn't guarantee you'll be able to drive it in a way users care about.

To overcome this challenge, start small and test simple variations before moving on to complex ones. Use A/B testing to compare message timing, format, and copy, and collaborate closely with design and sales teams to embed the intervention naturally into the product experience.

Additionally, you can tie the intervention to an immediate reward to make it more effective.

Reacting to the wrong churn signals

Sometimes, churn isn't driven by product friction but is caused by external factors like pricing, seasonality, or market shifts.

To overcome this, supplement behavioral data with contextual signals like user feedback, cancellation reasons, support tickets, or NPS responses. You can use surveys and post-churn interviews to uncover motivations or frictions that you can't see in the numbers.

Maintaining progress after a success

Let's say your intervention worked, and churn has dropped by 10%. Amazing. But what happens next? Many teams celebrate and move on, never checking back to see if the results hold. Eventually, churn creeps back in, or the same issue shows up in a new user segment.

To beat this, after each successful test, document your learnings in a shared playbook: the problem, the fix, the metric lift, and what conditions made it work. Continuously monitor the impact using the same retention window to ensure that the effects are long-term.
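Ongoing monitoring can be as simple as comparing each new weekly cohort against the retention level you reached after the fix. The sketch below assumes you track Day 3 retention per signup week; the baseline, tolerance, and weekly figures are hypothetical.

```python
def flag_regressions(weekly_retention, baseline, tolerance=0.02):
    """Return the weeks whose retention fell more than `tolerance` below the baseline."""
    return [week for week, rate in weekly_retention.items() if rate < baseline - tolerance]


# Hypothetical Day 3 retention by signup week after the rollout.
weekly_retention = {
    "2024-W10": 0.42,
    "2024-W11": 0.41,
    "2024-W12": 0.36,
    "2024-W13": 0.33,
}

flagged = flag_regressions(weekly_retention, baseline=0.40)
print("Weeks to investigate:", flagged)
```

Two consecutive flagged weeks, as in this sample, would be a signal to revisit the playbook entry and check whether the original conditions still hold.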

Final thoughts

Preventing churn starts with truly understanding your users and the points at which they may disengage. By using retention data and behavioral insights, you can identify when users are at risk and proactively intervene to keep them engaged.

When developing your churn prevention strategy, put yourself in the users' shoes. Think about the moments in their journey where they may encounter friction and how you can address it. Work closely with your product and customer teams to ensure your interventions align with the user experience and feel seamless.

By customizing your tactics to address users' pain points and behaviors, you can reduce churn, foster loyalty, and ultimately improve long-term retention.